Operating Support Program Evaluation (2011-12 – 2017-18)

Final Evaluation Report

June 2023

At the Canadian Institutes of Health Research (CIHR), we know that research has the power to change lives. As Canada's health research investment agency, we collaborate with partners and researchers to support the discoveries and innovations that improve our health and strengthen our health care system.

Canadian Institutes of Health Research

160 Elgin Street, 9th Floor

Address Locator 4809A

Ottawa, Ontario K1A 0W9

This publication was produced by the Canadian Institutes of Health Research. The views expressed herein do not necessarily reflect those of the Canadian Institutes of Health Research.

Acknowledgements

Special thanks to all participants in this evaluation through end of grant reports and case studies, CIHR’s Research, Knowledge Translation and Ethics (RKTE) Portfolio – Program Design and Delivery, Operations Support Branch and Science Policy Branches, CIHR’s Financial Planning Unit, CIHR’s Results and Impact Unit and Nathalie Kishchuk of Program Evaluation and Beyond.

The OSP Evaluation Team

Shevaun Corey, Angela Mackenzie, Kwadwo (Nana) Bosompra, Kimberly-Anne Ford, Jenny Larkin, Hayat El-Ghazal, Alexandra Leguerrier (student), Sabrina Jassemi (student), Michael Goodyer, and Ian Raskin.

For more information and/or to obtain copies, please contact evaluation@cihr-irsc.gc.ca.

Table of Contents

- List of Tables

- List of Figures

- List of Acronyms

- Executive Summary

- Program Profile

- Description of Evaluation

- Evaluation Findings

- Conclusions & Recommendations

- Appendix A - Tables & Figures

- Appendix B - Methodology

- Appendix C – References & End Notes

List of Tables

- Table 1: Operating Support Program Expenditures (2011-12 to 2017-18) in Millions

- Table 2: Knowledge Products, Grant Duration and Amount by Pillar

- Table 3: Knowledge Products, Grant Duration and Amount by Sex

- Table 4: Research Staff and Trainees Involved in OOGP Grants

- Table 5: OSP Administrative Costs as Percent of Total Program Expenditure, 2010-11 to 2017-18

- Table 6: OSP Costs per Application and per Grant Awarded, 2010-11 to 2017-18

List of Figures

- Figure A: Timeline of CIHR Reforms Process, 2009-2017

- Figure B: Application pressure and success rates across OOGP, Foundation and Project Grant programs, 2006-07 to 2017-18

- Figure C: Average Number of Publications and Presentations by Pillar

- Figure D: Average Number of Publications and Presentations by Sex

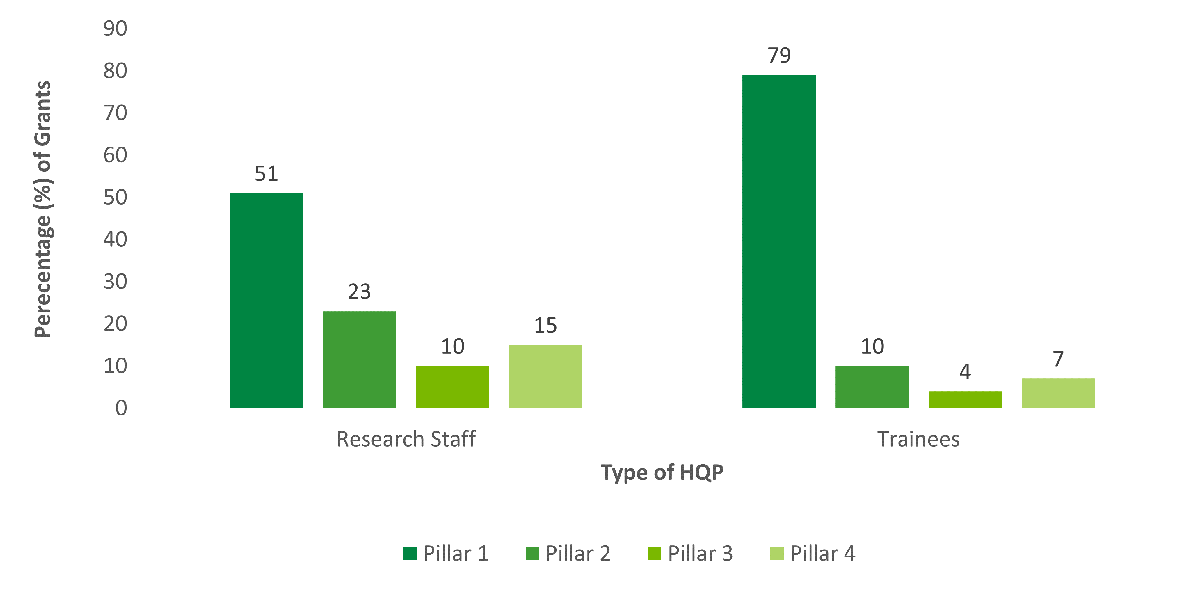

- Figure E: Percentage of Grants Involving Research Staff and Trainees by Pillar

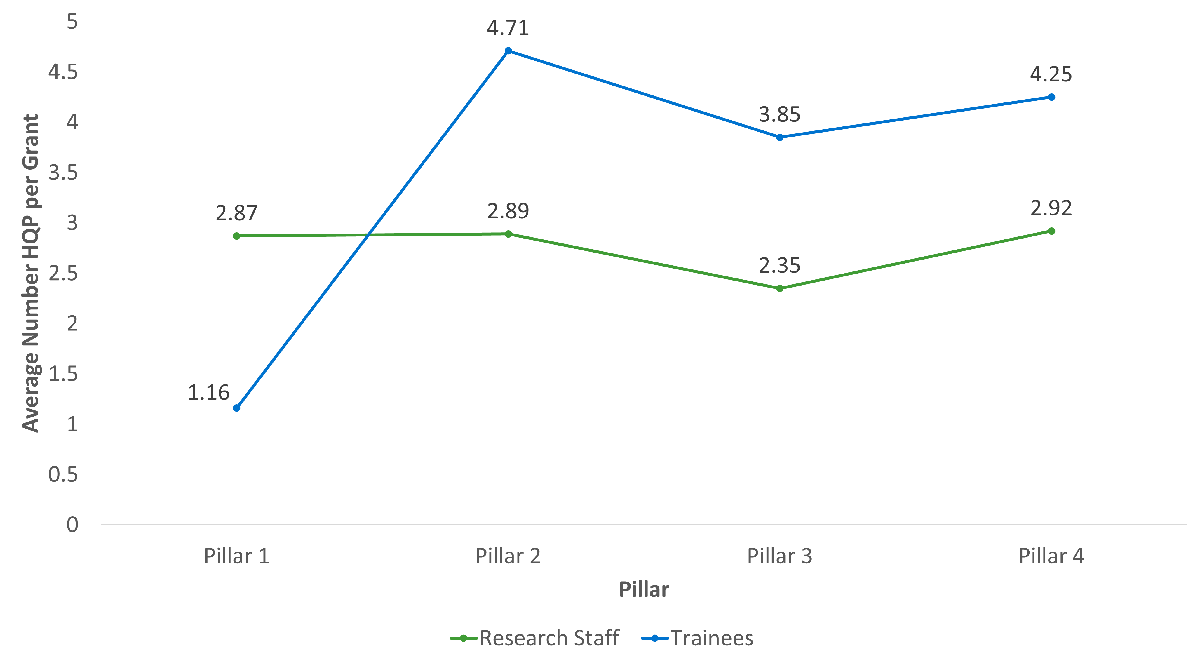

- Figure F: Average Number of Research Staff and Trainees per Grant by Pillar

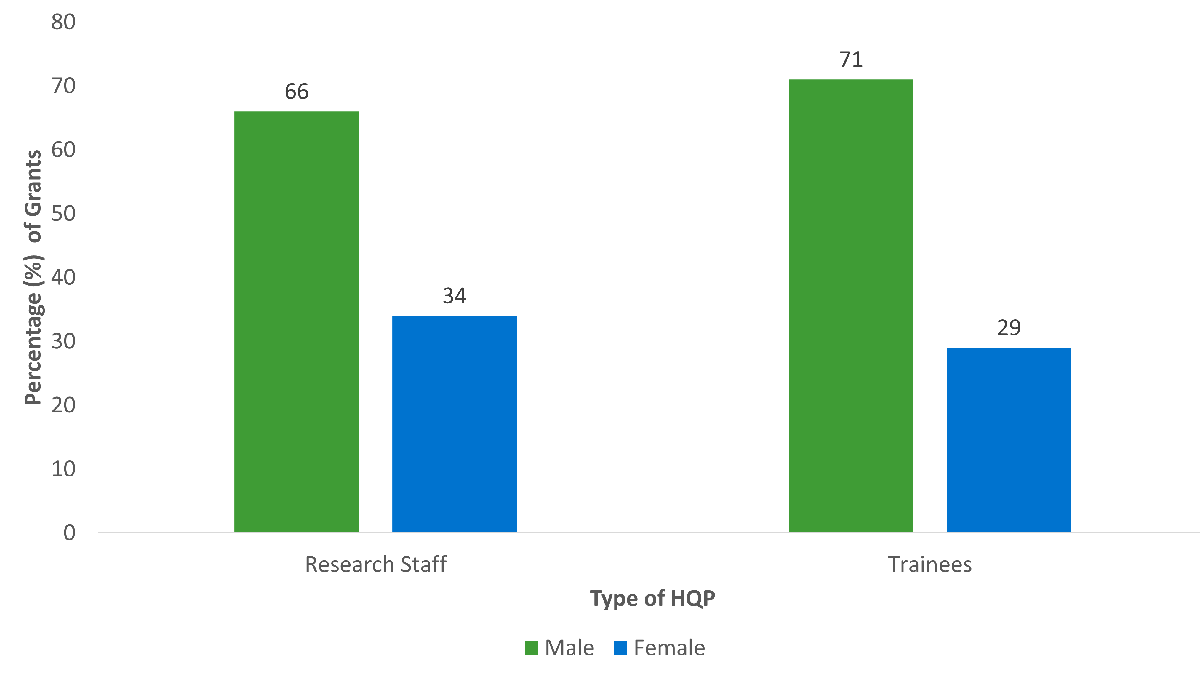

- Figure G: Percentage of Grants Involving Research Staff and Trainees by Sex of NPI

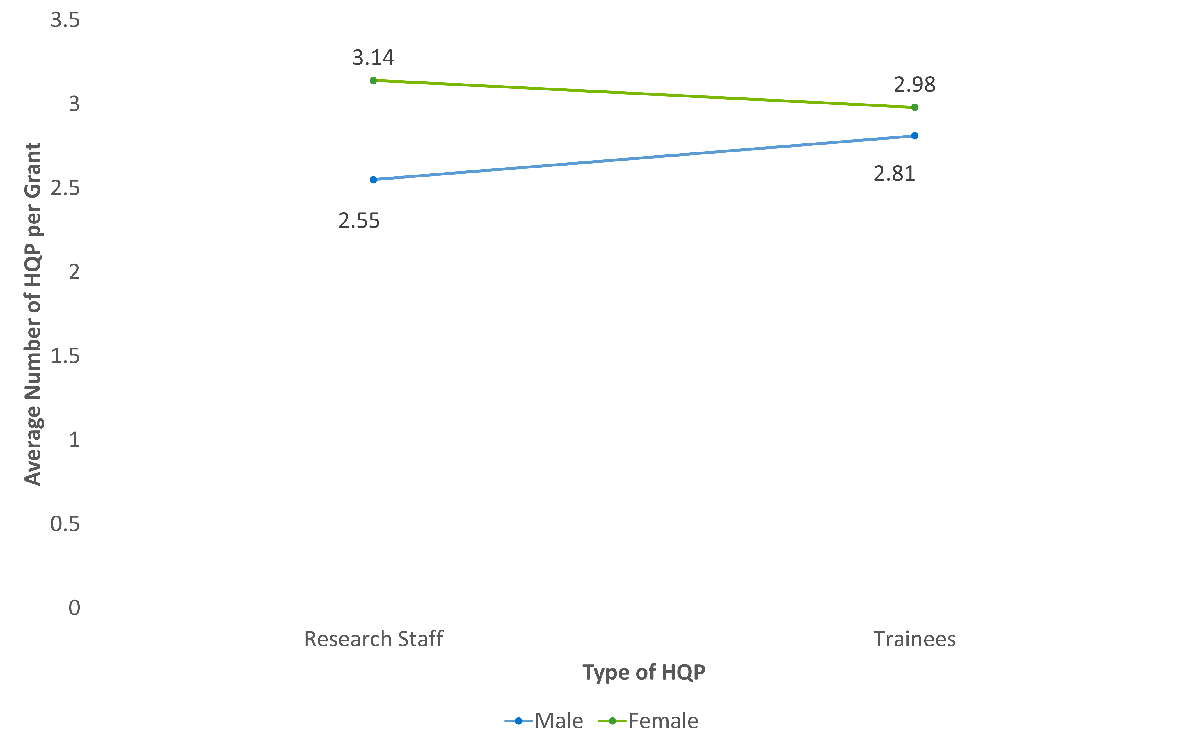

- Figure H: Average Number of Research Staff and Trainees per Grant by Sex of NPI

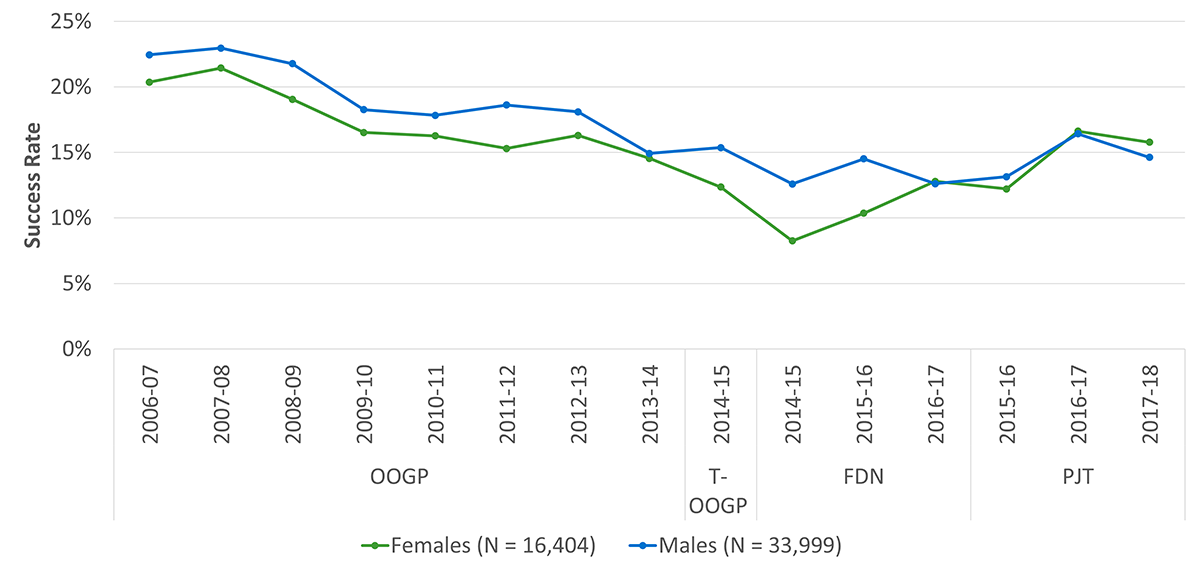

- Figure I: OSP Success Rates by Sex

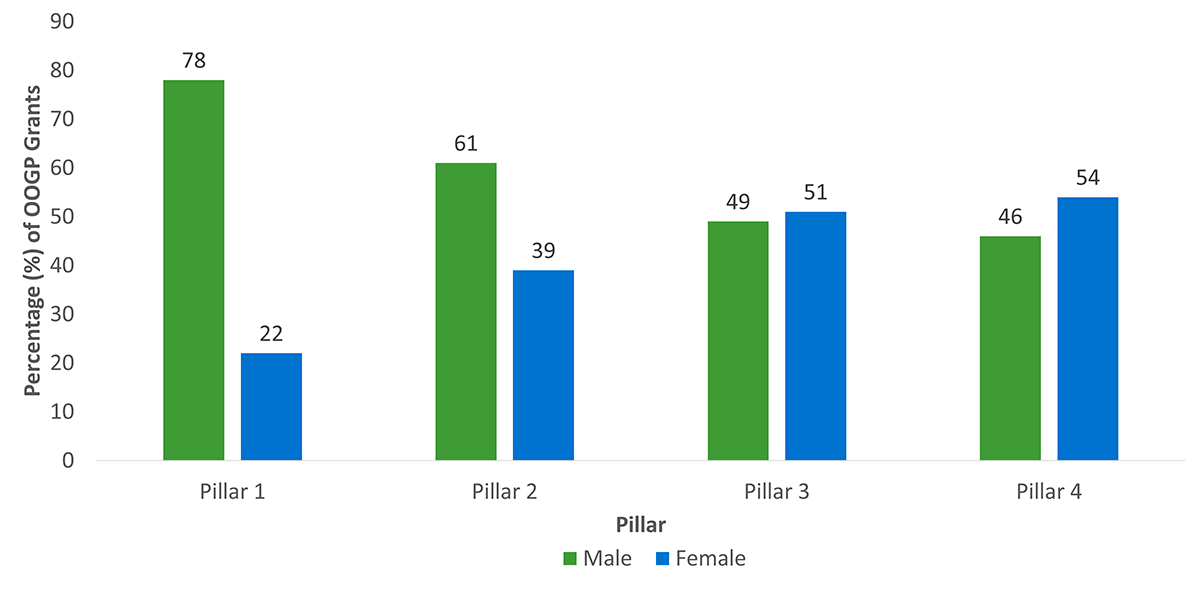

- Figure J: Proportion of OOGP Grants by Sex and Pillar

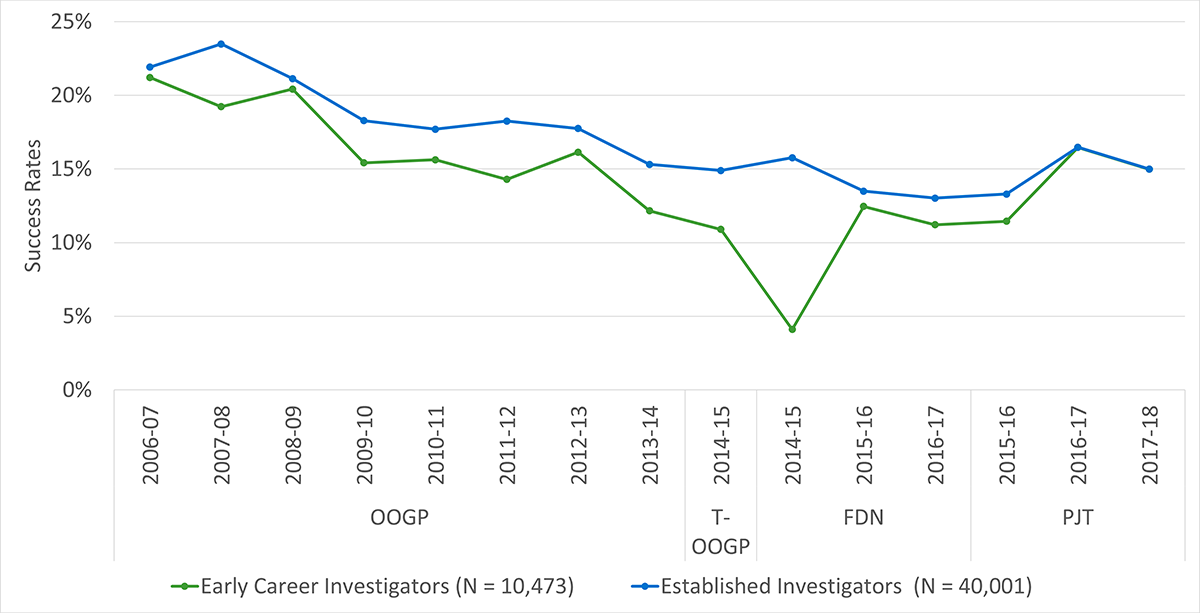

- Figure K: OSP Success Rates by Career Stage

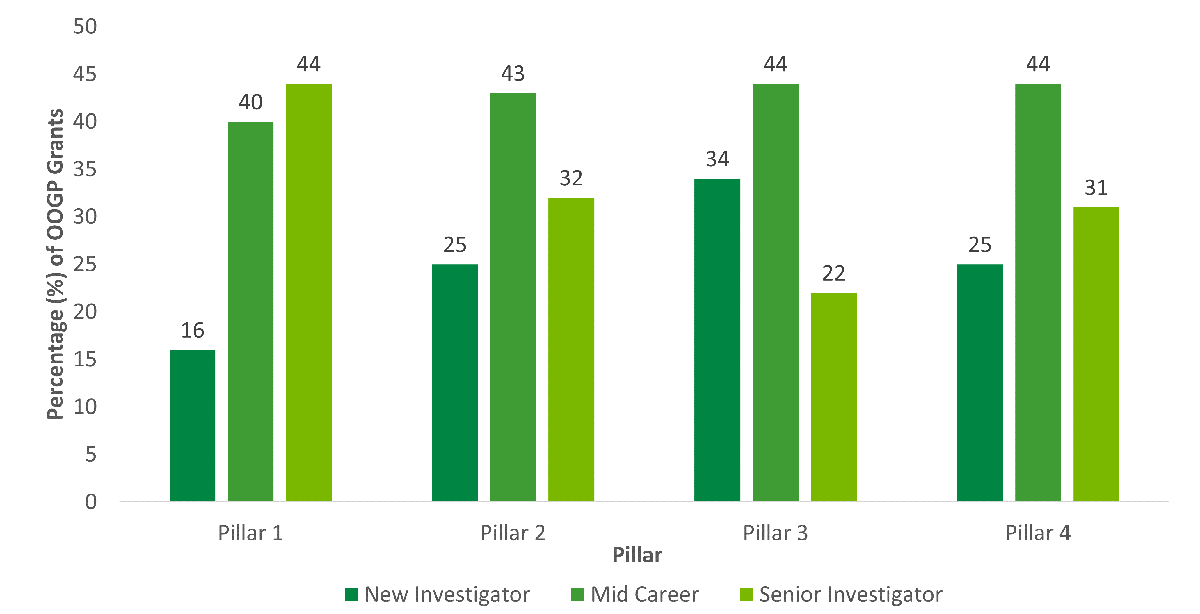

- Figure L: Proportion of OOGP Grants by Career Stage and Pillar

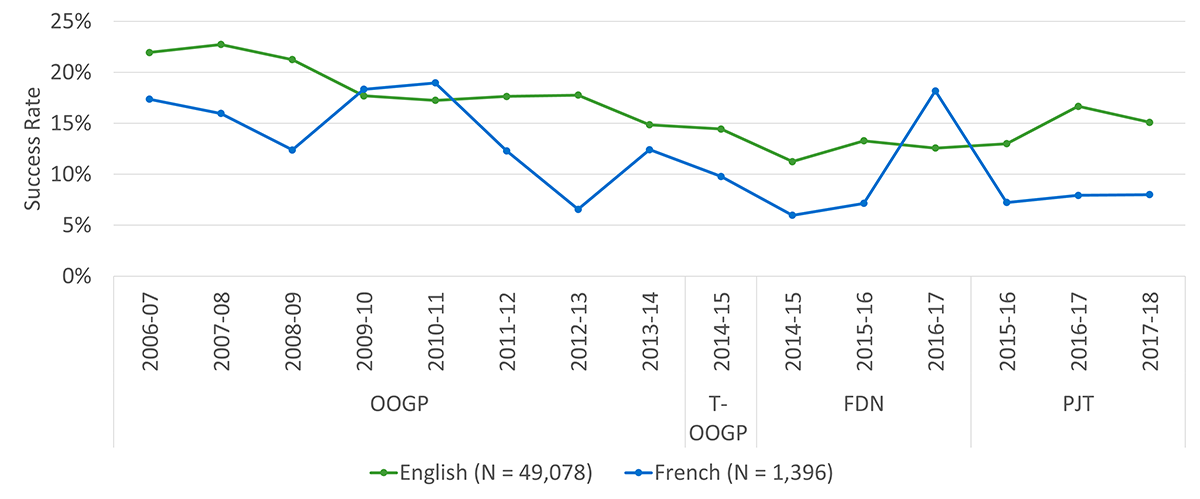

- Figure M: OSP Success Rates by Preferred Language

List of Acronyms

| Acronym | Meaning |

|---|---|

| ARC | Average of Relative Citations |

| ARIF | Average Relative Impact Factor |

| CAHS | Canadian Academy of Health Sciences |

| CIHR | Canadian Institutes of Health Research |

| DORA | San Francisco Declaration on Research Assessment |

| DRF | Departmental Results Framework |

| ECI | Early Career Investigator |

| FGP | Foundation Grant Program |

| FPA | Funding Policy and Analytics |

| HQP | Highly Qualified Personnel |

| IIR | Investigator-Initiated Research |

| NPI | Nominated Principal Investigator |

| OECD | Organisation for Economic Co-operation and Development |

| OOGP | Open Operating Grant Program |

| OSP | Operating Support Program |

| PDD | Program Design and Delivery |

| PDR | Priority-Driven Research |

| PGP | Project Grant Program |

| PIP | Program Information Profile |

| PREP | Peer Review Expert Panel |

| RIU | Results and Impact Unit |

| RKTE | Research, Knowledge Translation and Ethics |

| RRS | Research Reporting System |

| SCIO | Subcommittee on Implementation and Oversight |

Executive Summary

Program Overview

Approximately three-quarters of the budget at the Canadian Institutes of Health Research (CIHR) is used to support investigator-initiated research (IIR), that is, projects developed by individual researchers and their teams. At the time of the evaluation, funding for IIR was provided primarily through the Project Grant Program (PGP) and Foundation Grant Program (FGP), which replaced the Open Operating Grant Program (OOGP). Together, the three programs made up the Operating Support Program (OSP). In addition to the OSP, funding is also provided through Tri-Council career and training programs (e.g., Canada Research Chairs, Banting Postdoctoral Fellowships, and Vanier Canada Graduate Scholarships). Beginning in 2010, CIHR started the process of reforming its investigator-initiated programs and related peer review processes. However, the reforms took place in the context of constrained funding, experienced implementation challenges, and were met with mixed reactions from the health research community. The FGP and PGP underwent many changes and, in April 2019, CIHR made the decision to sunset the Foundation Grant Program.

Evaluation Objective, Scope and Methodology

The objective of this evaluation was to provide CIHR senior management with valid, insightful, and actionable findings about the performance of the former Open Operating Grant Program (OOGP) as well as the relevance and design and delivery of the successor programs, the FGP and PGP. The evaluation covered the period from 2011-12 to 2017-18 and is the second evaluation of the OOGP. Evaluation findings were triangulated across a variety of data sources, including analyses of documents, data, and end of grant reports along with bibliometric analysis. The evaluation meets the requirements of the Treasury Board of Canada Secretariat (TBS) under the Policy on Results and the Financial Administration Act.

Given that CIHR’s OSP funding is currently provided only through the PGP, the recommendations focus on this program. It is important to note that the evaluation was completed in fiscal year 2019-20; the approval and web posting of this report, as well as the development of the management action plan, were delayed due to the COVID-19 pandemic. It should also be acknowledged that a number of important changes have taken place at CIHR since the completion of this report, most notably the implementation of CIHR’s 2021-2031 Strategic Plan, which has resulted in a number of key actions related to advancing research excellence, building health research capacity, and integrating evidence in health decisions.

Key Findings

Overall, the evaluation found that funding investigator-initiated research remains an effective means to support health research and build health research capacity. The following key findings relate to the relevance, performance, and design and delivery of the OSP.

The OSP addressed a continued need for investigator-initiated health research

Given the nature and extent of the investment in the OSP, CIHR is addressing the continued need for investigator-initiated health research. The evaluation found that CIHR investments in the OSP are aligned with Government of Canada priorities, which are supported by the priorities of Canada’s Science Vision, the Fundamental Science Review, and the Federal Budget (2018 and 2019). Broadly, the OSP aligns with the CIHR Act, roles and responsibilities, and the strategic directions of Roadmap II (the strategic plan in place during the period under review), specifically promoting excellence, creativity and breadth in research.

The OSP contributed to advancing knowledge creation and building health research capacity

The evaluation found that the OSP has been attracting and funding research excellence. Specifically, OOGP-funded researchers and FGP and PGP applicants are more productive and impactful than health researchers in Canada and other OECD countries. The evaluation also found that OOGP funding across pillars, although the majority of grants are Biomedical, has successfully facilitated the creation, dissemination, and use of health-related knowledge (mainly within academia), as well as contributed to building Canadian health research capacity by increasing the number of researchers and trainees indirectly supported by these grants.

OOGP funded research results have demonstrated limited translation of knowledge beyond academia, longer-term health, and socio-economic impacts

Despite program objectives, as well as the objectives and priorities of the CIHR Act and strategic plan (Roadmap II), the evaluation found that less than half of OOGP grants involve and impact stakeholders beyond researchers and study stakeholders as reported by NPIs through end of grant reports. Similarly, less than 15% of OOGP grants resulted in the translation of knowledge beyond academia, longer-term health impacts, or socio-economic impacts.

CIHR needs to better define and align the objectives of the PGP in relation to the CIHR Act given that FGP has been sunset

The OSP has undergone many changes since the launch of the new programs under the reforms, with several elements not delivered as planned (e.g., reviewer matching software, College of Reviewers) and with noted implementation challenges (recommendations from the Internal Audit Consulting Engagement, the 2016 Working Meeting with Minister of Health, and the PREP). Despite broad alignment of the OSP with the CIHR Act, the evaluation shows that the current objectives of the PGP lack alignment with the Act, specifically regarding building Canadian health research capacity. Capacity building was a specific objective for both sunset programs (OOGP and FGP). Given that the PGP is the only remaining investigator-initiated program, a review of objectives to ensure alignment with the Act is needed.

CIHR needs to improve monitoring and assessment of the outcomes and impacts of its investigator-initiated research

While there is a wealth of application, competition, and implementation data available for the OSP (e.g., surveys about the application and decision processes), there is currently a lack of output/outcome data being collected to assess progress toward expected outcomes beyond the end of grant report (which is only administered 18 months post grant expiry). Furthermore, the evaluation shows that there are concerns about the availability and reliability of the data from the current end of grant report (e.g., self-report; low response rates; variability in completion times; overall length, structure, and type of questions included); thereby, limiting the ability to accurately assess whether OSP programs are effectively achieving their objectives. Although CIHR is making advances in data governance, challenges with data ownership and management (i.e., multiple units are responsible for the collection and dissemination of data) further affect the ability to monitor and assess program performance.

CIHR needs to ensure funding decisions are made equitably

The evaluation showed that there are differences in funding and outcome characteristics by pillar, gender, and career stage across individual OSP programs (OOGP, FGP, PGP) that need to be considered in the design and delivery of the PGP going forward. Although the OSP funds researchers across pillars, the majority are from Pillar 1 (Biomedical). Male researchers have higher success rates than female researchers, and research shows sex and gender biases against females and women in funding decisions specifically related to the OOGP and FGP. Early career researchers have lower success rates than mid- and senior career researchers, and English-language applications are generally more successful than French-language applications. Recently, CIHR has taken steps to address inequities, such as the equalization of success rates across career stages for the PGP. CIHR is committed to addressing any unconscious biases in its processes to ensure equitable access to research funding (e.g., CIHR’s Equity Strategy, Tri-Agency Statement on Equity, Diversity and Inclusion, Tri-agency Equity, Diversity and Inclusion Action Plan).

Recommendations

Given the programmatic shifts in the OSP, most notably the sunset of the FGP, the evaluation makes three recommendations aimed at improving the design and delivery and performance of the Project Grant Program.

Recommendation 1

CIHR should revise the PGP objectives to ensure they are clearly defined, fully aligned with, and support key aspects of the CIHR Act related to building Canadian health research capacity.

Recommendation 2

CIHR needs to ensure that investigator-initiated research funding is distributed as equitably as possible while minimizing the potential for peer review bias. The design and implementation of investigator-initiated grants must account for differences within the health community observed by the evaluation (e.g., pillar, sex, career stage and language) as well as in the research more broadly.

Recommendation 3

CIHR needs to improve the monitoring and assessment of activities and investments in investigator-initiated research.

- CIHR needs to enhance the way performance data is collected related to capacity building (e.g., indirect support of trainees), knowledge translation beyond academia (i.e., informing decision making), collaborations, health impacts, and broad socio-economic impacts to better understand the full impact of grant funding.

- CIHR needs to revise the current end of grant reporting template and process in order to improve the availability, accuracy, and reliability of the data collected.

- CIHR should consider additional ways to collect data beyond end of grant reports via interim reporting as well as longer term follow-up to assess impact.

Program Profile

Context

As stated in the CIHR Act, the CIHR mandate is to “excel, according to internationally accepted standards of scientific excellence, in the creation of new knowledge and its translation into improved health for Canadians, more effective health services and products and a strengthened Canadian health care system.” CIHR is the major Government of Canada funder of research in the health sector and classifies its research across four “pillars” of health research: biomedical; clinical; health systems/services; and population health. CIHR invests approximately $1 billion in health research each year. This investment supports both investigator-initiated and priority-driven research.

CIHR classifies investigator-initiated research (IIR) as research where individual researchers and their teams develop proposals for health-related research on topics of their own choosing. Approximately three-quarters of CIHR's $1 billion budget is used to support IIR through its core programs (i.e., OOGP, FGP, and PGP) as well as through Tri-Council career and training programs (e.g., Canada Research Chairs, Banting Postdoctoral Fellowships, and Vanier Canada Graduate Scholarships). The balance is spent on priority-driven research (PDR), which refers to research in areas identified as strategically important by the Government of Canada; in this case, themed calls for research proposals are made.

Evolution of Investigator Initiated Programming at CIHR

Until 2014, the Open Operating Grant Program (OOGP) was CIHR’s primary mechanism for supporting IIR. The specific objectives of the OOGP were to contribute to the creation, dissemination and use of health-related knowledge, and to help develop and maintain Canadian health research capacity. These objectives were targeted by providing support for original, high-quality projects or programs of research, proposed and conducted by individual researchers or groups of researchers, in all areas of health.

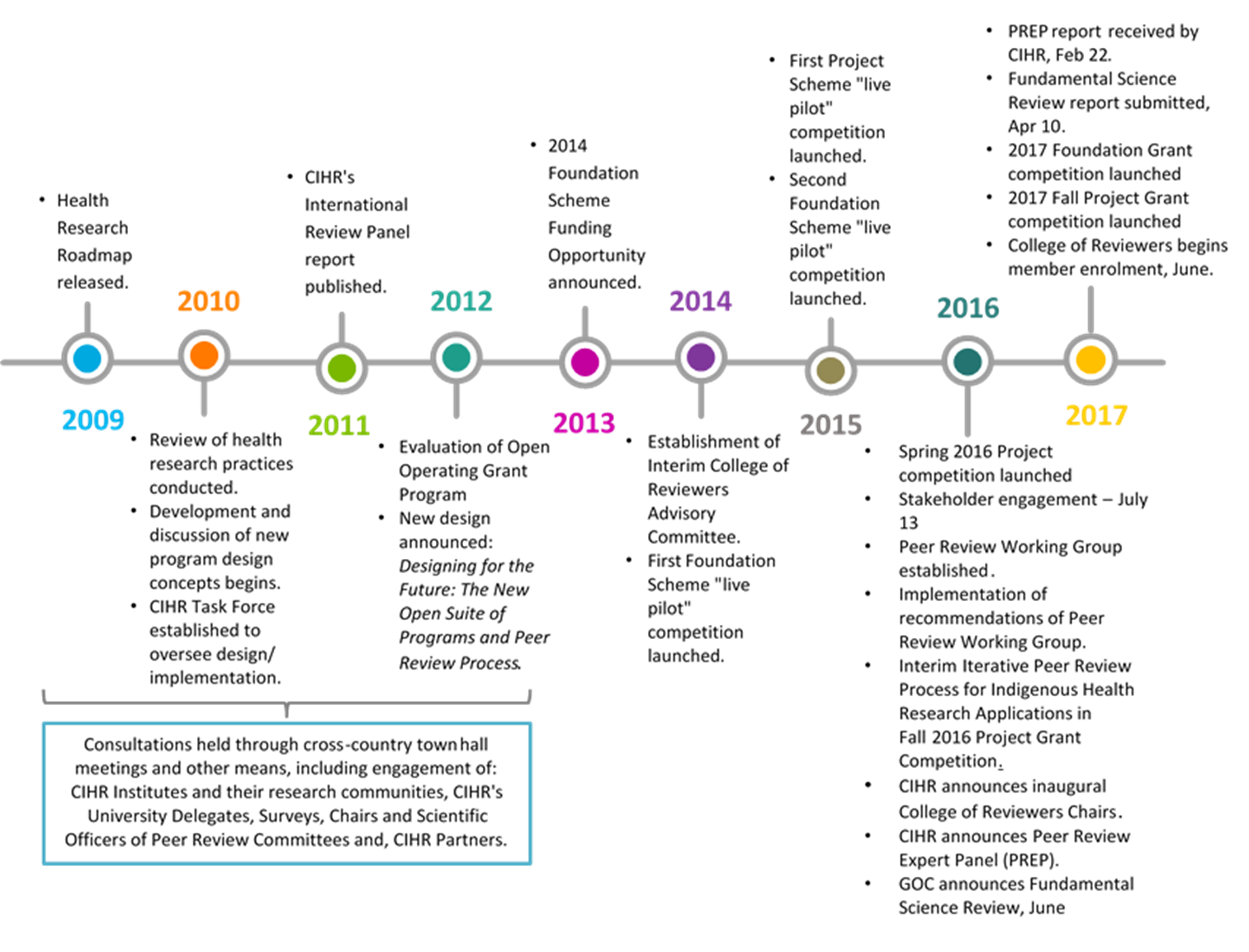

In 2009, CIHR’s Health Research Roadmap introduced a bold vision to reform the peer review and open funding programs. Beginning in 2010, CIHR started the process of reforming its investigator-initiated research programs, including the OOGP, and the related peer review processes (see Figure A: Timeline of CIHR Reforms Process, 2009-2017). These reforms were informed by three main lines of evidence:

- Data from a 2010 IPSOS Reid poll of the scientific community, conducted by CIHR, that found strong support from the research community to fix a peer review system that was perceived as ‘lacking quality and consistency’;

- A recommendation of CIHR's second International Review Panel in 2011 that ‘CIHR should consider awarding larger grants with longer terms for the leading investigators nationally. It should also consolidate grants committees to reduce their number and give them each a broader remit of scientific review, thereby limiting the load’; and,

- Findings from the 2012 evaluation of CIHR's Open Operating Grant Program (OOGP) which recognized challenges in open funding across pillars of research and supported the need to reduce peer review and applicant burden.

CIHR conducted several rounds of consultations with its stakeholder communities prior to and throughout the reforms process. The earliest consultations, which led to the proposed design, resulted in a number of challenges being identified with CIHR’s existing funding architecture and peer review processes. The resulting re-design was intended to address those challenges, some of which included funding program accessibility and complexity, applicant burden and “churn”, insufficient support for new/early career investigators, unreliability/inconsistency of reviews, and high peer reviewer workload.

CIHR moved to a new open suite of programs, which were piloted and implemented between 2010 and 2016. The majority of its IIR funding was now being awarded through its FGP and PGP. The objectives of the new open suite of programs are provided below (Footnote i). However, the reforms, which took place in the context of constrained funding and experienced implementation challenges, were met with mixed reactions from the health research community. During this time, CIHR’s strategic plan was also updated (Health Research Roadmap II – 2014-15 to 2018-19) and continued the implementation of the reforms as part of the strategic direction focused on promoting excellence, creativity and breadth in health research and knowledge translation.

Monitoring and Review of the New Programs

Beginning in 2013, CIHR piloted specific design elements associated with the new programs, including structured applications, remote review, a new rating scale, and a streamlined CV. These pilots were conducted in a ‘live’ manner (i.e., piloted during routine program delivery across several programs) so that CIHR could monitor outcomes in an evidence-informed manner and safeguard the reliability, consistency, fairness and efficiency of the competition and peer review processes. CIHR intended the implementation of programs to be iterative, drawing on feedback from pilot studies with stakeholders and its own internal reviews. The results from the pilots were analyzed and consolidated into the reports listed below; an overview of the key points follows.

- 2014 Foundation Grant “Live Pilot” competition Report

- Fall 2013 Knowledge Synthesis Pilot Report

- Spring 2013 Fellowships Competition Report

In 2015, CIHR commissioned a number of reviews to assess CIHR's internal systems and implementation processes, to allow for adjustments in a timely manner, given the complexity of the pilot projects and new programs.

CIHR’s Internal Audit Unit reviewed the reforms implementation as part of an Internal Audit Consulting Engagement (2016), which focused on the governance and administrative practices linked to project management and internal reorganization needed to deliver the new Foundation and Project Grant programs. The report noted that the reforms implementation project benefited from well-developed planning tools and that the pilots were rolled out on time. The report also indicated that there were opportunities for improvement in the areas of information-sharing, communications, reporting, project planning, and stakeholder engagement, all of which are being addressed by CIHR. The Reforms CRM Project Independent Third-Party Review by Interis Consulting was specifically designed to assess the implementation of the business systems required to support the new program delivery processes. CIHR sought expert advice to provide recommendations concerning implementing complex, transformative business systems. Results indicated that opportunities for improvement included clarifying roles and responsibilities as well as project schedules and scope.

CIHR accepted the recommendations of the reviews outlined in the CIHR Management Response, released in May 2016, and has taken steps to implement the reports’ recommendations and established new governance committees to monitor scope and timelines for the projects. These two reviews allowed management to receive feedback as the new managerial and business systems were being implemented, which allowed for course corrections. To date, CIHR has implemented most of the recommendations and continues to undertake the necessary steps to address project management challenges.

As of mid-2018, a total of four FGP competitions had been launched (in 2014, 2015, 2016 and 2017), the first two of which were labelled as “live pilots”. The Project Grant Program has also had four competition launches since 2016, the first of which was labelled a “live pilot”. The competitions were held in Spring 2016, Fall 2016, Fall 2017 and Spring 2018, with no competition launched in Spring 2017. Several enhancements were made to the 2015 FGP “live pilot” competition, informed by the 2014 “live pilot” and related survey data from reviewers, applicants, and Competition Chairs. These enhancements included clarifying adjudication and application criteria and guidelines, limit increases and additions to sections of the Foundation CV and Stage 2 application, changes in sub-criteria weighting, additional reviewer training, and the exploration of the benefits and operational requirements of introducing synchronous reviews.

The implementation of the reforms was met with mixed reactions by Canada's health research community and on July 13th, 2016, at the request of the Minister of Health, CIHR hosted a Working Meeting with members of the community. The purpose of this meeting was to review and jointly address concerns raised regarding the peer review processes, particularly those associated with the Project Grant Program. The key outcomes of that meeting and requested changes to the 2016 Project Grant competition included the appropriate review of Indigenous applications; adjustments to the number of applications permitted and page limits; adjustments to Stage 1: Triage (e.g., number of reviews, elimination of asynchronous online discussion, elimination of alpha scoring system) and Stage 2: Face to Face Discussion (e.g., inclusion of highly ranked applications and those with large scoring discrepancies, return to face to face panels for 40% of applications reviewed at Stage 1); as well as the establishment of a Peer Review Working Group.

The Peer Review Working Group, under the leadership of Dr. Paul Kubes, was established as an outcome of the July 13th, 2016, Working Meeting. The Peer Review Working Group discussed each of the outcomes and made recommendations for action, including: revised eligibility and adjudication criteria; revised roles for Competition Chairs and Scientific Officers; the removal of asynchronous online discussion from Stage 1; reversion to a numeric scoring system; Stage 2 face-to-face-reviews; reviewer training; and, the launch of the pilot observer program in peer review for early career researchers.

In September 2016, CIHR announced an International Peer Review Expert Panel (PREP) to examine the design and adjudication processes of its IIR programs in relation to the CIHR mandate, the changing health sciences landscape, international funding agency practices, and the available literature on peer review. The review was in line with the mandated five-yearly cycle of international review of CIHR, but the timing was brought forward due in part to the stakeholder reaction to the implementation of the reforms. The Panel was supported by the Director General, Performance and Accountability Branch and members of the OSP evaluation team given the need for independence of the panel as well as the direct relevance of the panel’s work to the planned OSP evaluation. The Panel presented its report in February 2017, in which it observed that although the basic design objectives and intent of the reforms were appropriate, sound, and evidence-based, there had been implementation failures. These implementation failures included the failure to: effectively pilot the applicant-to-reviewer matching algorithm (which is no longer being used); have the College of Reviewers in place at the outset of the reforms; effectively engage the research community throughout the reforms; and maintain the trust and confidence of CIHR's main stakeholders, the research community and Canadians, as represented through politicians. The Panel noted that the implementation challenges, coupled with the series of rapid simultaneous changes that CIHR had made to some of its funding programs and Institutes and the persistent constraint of flat-lined funding for investigator-initiated research in Canada, had resulted in a loss of trust from the CIHR research community and its stakeholders.

In July 2017, CIHR announced that the inclusion of early career investigators (ECIs) in the FGP was inconsistent with the vision of the program and that ECIs would no longer be eligible to apply for a Foundation Grant starting with the 2017-2018 competition. At the same time, in response to feedback from its key constituents and in the context of available funding, CIHR announced a realignment of its funding strategy for the two programs, redirecting $75M from the Foundation Grant envelope of $200M to the Project Grant envelope. Then, in November 2017, CIHR struck a Foundation Grant Program Review Committee, chaired by Dr. Terry Snutch of the University of British Columbia, to provide recommendations on the program’s objectives, design, application, and peer review processes; these were presented to CIHR’s Governing Council in November 2018. The Committee recommended continuing to support the Foundation Grant Program with modifications, including: continuing to target mid-senior career researchers; having a single stage/application/review process that is conducted face-to-face; having the FGP represent 25% of CIHR’s IIR budget; making Foundation Grant Program applicants ineligible to apply for the Project Grant Program at the same time; and tracking data related to pillar, gender, and visible minorities to address any biases that may arise.

On April 15, 2019, CIHR announced that the Foundation Grant Program would be sunset, and the 2018-19 competition would be the last. The decision was based on a number of consultations (including CIHR’s Scientific Directors, Science Council, and Governing Council), the input of the Foundation Grant Program Review Committee (struck in 2017, which recommended significant modifications to the program while preserving it), and a critical review of data including preliminary findings from this evaluation. The review of the data highlighted unintended consequences in the funding distribution within the program (e.g., funding a disproportionate number of applicants who were older/more senior, from larger institutions, and who were conducting Pillar 1 research, as well as inequity for female applicants in Stage 1) that were deemed unacceptable. In addition, CIHR acknowledged that the peer review process did not align with the renewed commitment to face-to-face review and had not reduced reviewer burden as originally envisioned.

Since 2019, there have been a number of key changes at CIHR, notably the development and implementation of CIHR’s 2021-2031 Strategic Plan, which has resulted in a number of actions related to advancing research excellence, building health research capacity, and integrating evidence in health decisions. In addition, CIHR’s Equity Strategy outlines actions to foster equity, diversity and inclusion in the research system.

Program Objectives

The focus in the current evaluation is on the Operating Support Program (OSP), which during the period under review represents CIHR investments in the OOGP, and its successor programs, the FGP and PGP. The OSP is a sub-program of CIHR’s broader Investigator Initiated Program (Footnote ii). The OSP aims to contribute to a sustainable Canadian health research enterprise by supporting world-class researchers in the conduct of research and its translation across the full spectrum of health.

Open Operating Grant Program

As indicated above, the OOGP was CIHR’s primary mechanism through which investigator-initiated health research was supported, starting prior to the creation of CIHR in the year 2000 (when it was the Medical Research Council of Canada) up until 2014-15. The specific objectives of the OOGP were to:

- Support original and high-quality projects or teams/programs of research;

- Support individual researchers and groups of researchers;

- Support research in all areas and disciplines with relevance to health;

- Contribute to the creation and use of health-related knowledge;

- Contribute to the dissemination, commercialization/knowledge translation, and use of health-related knowledge; and

- Develop and maintain Canadian health research capacity, including research training.

As a result of the reforms, two programs were created: the FGP, to provide long-term support for the pursuit of innovative, high-impact programs of research; and, the PGP, to support projects with a specific purpose and defined endpoint. The objectives of the OOGP were to be encompassed in the objectives of the new programs.

The total number of OOGP grants awarded in competition years between 2000-01 and 2015-16 was 13,331 across all four pillars (Pillar 1 - 72%, Pillar 2 - 12%, Pillar 3 - 6%, Pillar 4 - 8.5%). Almost three-quarters were awarded to male NPIs (72%) and just over one quarter (28%) were awarded to female NPIs.

Foundation Grant Program

The FGP (one competition per year) was designed to contribute to a sustainable foundation of health research leaders by providing long-term support for the pursuit of innovative, high-impact programs of research.

The program is expected to:

- Support a broad base of research leaders across career stages, areas, and disciplines relevant to health;

- Develop and maintain Canadian capacity in research and other health-related fields;

- Provide research leaders with the flexibility to pursue new, innovative lines of inquiry; and,

- Contribute to the creation and use of health-related knowledge through a wide range of research and/or knowledge translation activities, including any relevant collaboration.

Project Grant Program

The PGP (two competitions per year) was designed to capture ideas with the greatest potential for important advances in health-related knowledge, the health care system, and/or health outcomes, by supporting projects with a specific purpose and defined endpoint (Footnote iii).

The Project Grant Program is expected to (Footnote iv):

- Support a diverse portfolio of health-related research and knowledge translation projects at any stage, from discovery to application, including commercialization;

- Promote relevant collaborations across disciplines, professions, and sectors; and,

- Contribute to the creation and use of health-related knowledge.

Program Expenditures, Application and Success Rates

For the period from 2011-12 to 2017-18, grant expenditures for the OSP comprised OOGP grants until the introduction of FGP grants in 2015-16 and PGP grants in 2016-17, although any OOGP grants still in progress continue to have expenditures. Grant funds have increased steadily from $434M in 2011-12 to $539M in 2017-18 (Table 1: Operating Support Program Expenditures (2011-12 to 2017-18) in Millions). It should be noted that over the time of the evaluation, the majority of grants have been funded through the OOGP (82.5%), followed by the FGP (10.6%) and the PGP (6.9%). OSP has accounted for between 48% and 52% of CIHR’s total annual grants and awards expenditures between 2011-12 and 2017-18. OOGP accounted for 43-54% between 2000-01 and 2010-11 as noted in the previous evaluation.

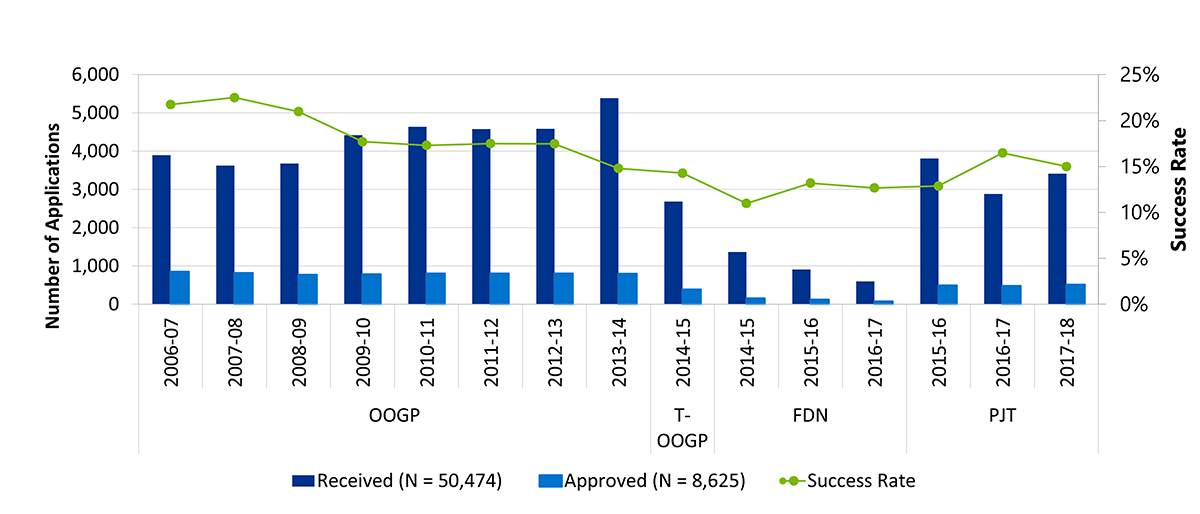

The application pressure for the OOGP was relatively consistent until 2012-13, increasing for its last fiscal year before the launch of the new programs. Success rates for the OOGP started decreasing in 2009-10 and continued to do so, likely due to the flat-lining budget between 2008 and 2013, as noted by the PREP. Applications to the PGP competitions far outnumber those to the FGP competitions and the success rates for the former appear to be slightly higher than for the latter (Figure B: Application pressure and success rates across OOGP, Foundation and Project Grant programs, 2006-07 to 2017-18).

Description of Evaluation

The evaluation of CIHR’s OSP, for the period from 2011-12 to 2017-18, focused on the performance of the former OOGP as well as the relevance and design and delivery of the FGP and PGP, which replaced the OOGP. In 2011-2012, the Evaluation Unit at CIHR conducted an evaluation of the relevance and performance of the Open Operating Grant Program (OOGP) from 2000-01 to 2010-11.

Evaluation Scope and Objectives

CIHR’s evaluation of its OSP, included in CIHR’s 2017-18 Evaluation Plan, was designed to meet the Tri-Agencies’ requirements to the Treasury Board of Canada Secretariat (TBS) under the Policy on Results (2016) by addressing the core issues of performance, efficiency, relevance, and design and delivery. In addition, the evaluation was intended to provide senior management with independent, objective, and actionable evidence about the impacts of research funded through the OOGP and the effectiveness of the design and delivery of the FGP and PGP. Findings from the evaluation have informed discussions and decisions about the OSP, particularly the FGP, throughout the evaluation.

The evaluation covers the OOGP, FGP, and PGP as part of the OSP from 2011-12 to 2017-18. Similar to the approach taken in the 2012 evaluation of the OOGP, the scope excludes funding through priority-driven mechanisms. The evaluation meets the requirements of the Treasury Board of Canada Secretariat (TBS) under the Policy on Results and the Financial Administration Act.

It is important to note that the evaluation was completed in fiscal year 2019-20, with the approval and web posting of this report as well as the development of the management action plan delayed due to the Covid-19 pandemic. It should also be acknowledged that a number of important changes have taken place at CIHR since the completion of this report, most notably the implementation of CIHR’s 2021-2031 Strategic Plan, which has resulted in a number of key actions related to advancing research excellence, building health research capacity, and integrating evidence in health decisions.

Previous Evaluation of the OOGP

The OOGP was evaluated previously in 2012. Broadly, the evaluation found that the program met key objectives, contributed to the creation and dissemination of health-related knowledge, and supported high quality research. Considering the forthcoming program and peer review changes with the reforms, recommendations included:

- ensuring future open program designs use peer reviewer and applicant time efficiently;

- ensuring designs account for varying application, peer review and renewal behaviours across Pillars;

- conducting further analyses on changes to the peer review system to fully understand potential impacts; and

- creating measures of success for future open programs, ensuring they are relevant for CIHR’s different health research communities.

The Management Response Action Plan (MRAP), included in the report, showed agreement with all recommendations, most of which were addressed throughout the implementation of the reforms.

Factors Affecting the Current Evaluation

Several factors had an impact on the current evaluation. Most importantly, there was a major program shift from the OOGP (which was CIHR’s and previously the Medical Research Council’s primary mechanism for supporting investigator-initiated research for decades) to the FGP and PGP. In 2009, CIHR launched a five-year strategic plan, Health Research Roadmap, introducing a vision to reform the peer review and open funding programs. Beginning in 2010, CIHR started the process of reforming its IIR programs, including the OOGP, and the related peer review processes, to address the full scope of its mandate while also reducing the burden on peer reviewers and applicants. CIHR intended the implementation of the changes to be iterative, drawing on consultations with stakeholders, pilot studies, and its own internal reviews. The new suite of programs, which replaced the OOGP, was piloted and implemented over the 2010-2017 period (Footnote v). However, the reforms, which took place in the context of constrained funding and experienced implementation challenges, were met with mixed reactions from the health research community. CIHR’s strategic plan was also updated during the implementation of the reforms (Health Research Roadmap II – 2014-15 to 2018-19). Despite the evolving nature of these programs, the evaluation proceeded in order to meet TBS requirements under the Policy on Results. In 2016, CIHR announced the PREP, which was supported directly by the OSP evaluation team. Then in 2019, CIHR began the process to develop its next strategic plan and made the decision to sunset the FGP. Additional details are provided below.

Evaluation Questions

The following evaluation questions were developed to support the evaluation objectives and were informed by consultations with the Executive Management Committee (in its capacity as CIHR’s Performance Measurement and Evaluation Committee), various Directors General from CIHR Branches (Finance; Research, Knowledge Translation and Ethics; Performance and Accountability), and the Subcommittee on Implementation and Oversight (SCIO).

Relevance: Operating Support Program

- How does the Operating Support Program align with CIHR and the Government of Canada’s roles and responsibilities in investigator-initiated health research?

Performance: Impact Assessment of OOGP (Footnote vi)

- What are the outcomes and impacts of CIHR investments in the Operating Support Program through the OOGP related to:

- Advancing health-related knowledge through the production and use of research

- Building Canadian health research capacity

- Informing decision making through the dissemination of health-related knowledge generated from OOGP supported research

- Health impacts generated from OOGP supported research

- Broad socio-economic impacts generated from OOGP supported research

Design and Delivery: Implementation of Foundation and Project Grant Programs, Costing Associated with the Operating Support Program

- Have the programs been designed and delivered to achieve expected outcomes?

- Is CIHR’s Operating Support Program being delivered in a cost-efficient manner?

Methodology

Consistent with TBS guidelines and recognized best practices in evaluation, a range of methods and data sources were used to triangulate the evaluation findings (document and data review; end of grant reports, n = 3304; case studies, n = 8; bibliometric analyses).

The design for the current evaluation was developed in response to the information needs of senior management at a particular point in time, given the stage of implementation of the new suite of programs. It was conducted at a time when additional monitoring and consultative activities were taking place, including the collection of data to inform program implementation. Given the amount of data being collected for the implementation of the new suite of programs, the extensive consultations conducted during the reforms, and decisions by senior management at CIHR, the design specifically incorporated the majority of these activities as lines of evidence (e.g., PREP), while purposefully limiting the use of additional primary data collection measures with the research community (e.g., interviews, surveys).

Given the early stages and continued modification of the FGP and PGP, the focus, for the assessment of performance, was on the OOGP. Specifically, the evaluation examined the outcomes and impacts of OOGP related to advancing knowledge, building Canadian health research capacity, informing decision-making, health impacts, and broad socio-economic impacts. The creation, dissemination, knowledge translation, and use of health-related knowledge were specific objectives of the OOGP. Similarly, the creation and use of health-related knowledge is an objective for both the FGP and PGP. Therefore, lessons learned from the performance assessment of OOGP were expected to be applicable to the successor programs. Furthermore, the Canadian Academy of Health Sciences (CAHS) Impact Framework [ PDF (2.4 MB) - external link ] (CAHS, 2009) was used to guide the analysis of outcomes and impacts from the OOGP. Data from end of grant reports were disaggregated by pillar, sex, career stage, and language, with comparative analyses undertaken when sample sizes were large enough. Similarly, when possible and appropriate, comparisons were made to findings from the Evaluation of OOGP (2012). Additional methodological details can be found in Appendix B - Methodology.

Limitations

The following limitations should be noted:

- A performance evaluation of the FGP and PGP components of the OSP (beyond bibliometric data) was not possible given their stage of implementation and ongoing modifications. Therefore, findings from the performance evaluation of the OOGP were intended to inform the current and future programs.

- Attributing outcomes and impacts of grants solely to OOGP funding was not possible given that researchers have additional sources of funding and support, as well as additional possible confounding variables (e.g., field of research); therefore, results are presented as contributions.

- Performance results are based largely on existing and available self-report data and, as such, are subject to potential biases and recall issues. The sample size for end of grant reports was reasonable; however, generalizability of some data may be limited. Additionally, there are concerns with the reliability of the end of grant data due to variations in the level of completeness as well as the structure of the questions and length of the report. Lastly, given the timeframe within which the end of grant report is administered (~18 months post grant expiry) it is possible that longer term impacts are not fully captured; however, few researchers reported these outcomes would occur in the future and the case studies found that longer term outcomes were not realized.

- Although a variety of data inputs were used, much of it was secondary data collected for different purposes, generated at different points in time, by different sources. These included the considerable data collected and analyses done on the FGP and PGP (e.g., pilot and quality assurance studies), the recommendations of the Peer Review Working Group and PREP (2017), and the end of grant report data (2011-2016).

Evaluation Findings

Relevance: Continued Need for OSP and Alignment with CIHR Act

Key Findings

- The OSP addressed a continued need for investigator-initiated research and was aligned with Government of Canada priorities outlined in Canada’s Science Vision, the Federal Budgets, and the Fundamental Science Review.

- Broadly, the OSP has contributed to fulfilling the objectives of the CIHR Act and the priorities of the strategic plan (Roadmap II) in place during the period under review.

- The OSP is closely aligned with CIHR’s roles and responsibilities.

The OSP was closely aligned with CIHR’s role, responsibilities, and priorities

The program contributes to:

- the fulfillment of the CIHR Act and mandate;

- Strategic Direction 1 of Roadmap II: Promoting Excellence, Creativity and Breadth in Health Research and Knowledge Translation; and

- the three results areas in CIHR’s Departmental Results Report (Canada’s health research is internationally competitive, Canada’s health research capacity is strengthened, and Canada’s health research is used).

CIHR's mandate is "to excel, according to internationally accepted standards of scientific excellence, in the creation of new knowledge and its translation into improved health for Canadians, more effective health services and products and a strengthened Canadian health care system". CIHR’s vision is to position Canada as a world leader in the creation and use of health knowledge that benefits Canadians and the global community.

The OSP has contributed to the achievement of this overarching mandate and vision by attracting and funding health research excellence since 2000 (through the OOGP, FGP, and PGP), with OSP researchers outperforming benchmark comparators (e.g., health researchers in OECD countries). In addition, the OOGP has facilitated the creation, dissemination and use of health-related knowledge (more so within academia), as well as the development and maintenance of Canadian health research capacity by supporting original, high-quality projects proposed and conducted by individual researchers or groups of researchers in all areas of health research, as evidenced in the current evaluation. Relevance was also strongly determined in CIHR’s 2012 Evaluation of the Open Operating Grant Program [ PDF (3.3 MB) ].

Given that many of the OOGP objectives are encompassed within the objectives of the FGP and PGP, similar results would be expected from these two programs as were observed from the OOGP. However, there are some differences in the structure of these two new programs. Broadly, the objectives of both the FGP and PGP together are generally aligned with the CIHR Act and mandate; however, the FGP did not update its objectives to reflect the ineligibility of early career researchers and the PGP does not explicitly mention the development and maintenance of Canadian health research capacity in its objectives. Although it is expected that highly qualified personnel (HQP) would be involved in and trained through PGP grants, given that health research training is a priority for CIHR, this role should be explicitly recognized in the program’s objectives. In addition, it should be noted that the focus in the current evaluation was on the design and delivery of the new programs and therefore no assessment of progress towards objectives has been undertaken. This should be a focus of future evaluations of IIR in general and the PGP in particular.

The OSP addressed a continued need and was aligned with Government of Canada priorities

The continued need for investigator-initiated research, and its alignment with Government of Canada priorities, is reinforced by:

- Canada’s Science Vision;

- the Fundamental Science Review [ PDF (7.8 MB) - external link ];

- the Federal Budget 2018; and,

- the Federal Budget 2019.

The value of IIR is affirmed in Canada's Science Vision. Specific objectives include making science more collaborative through increased support for research through the granting councils and other support for research and research infrastructure, fostering the next generation of scientists, and promoting equity and diversity in research.

In addition, the Fundamental Science Review [ PDF (7.8 MB) - external link ] (led by Dr. Naylor) recommended that the Government of Canada rapidly increase its investment in independent investigator-led research to redress the imbalance caused by differential investments favouring priority-driven research over the past decade.

The objectives and recommendations described above were reflected in Federal Budget 2018. Budget 2018 affirmed the government's commitment to supporting research and the next generation of scientists with a historic investment of nearly $4 billion over five years, with nearly $1.7 billion going to the granting councils to increase support and training opportunities for researchers, students, and other HQP. Budget 2019 builds on these investments in research excellence in Canada through additional investments in science, research and technology organizations and establishing a new Strategic Science Fund.

The OSP aligns with these priorities given that it provides grant funding to researchers to conduct research in any area related to health aimed at the discovery and application of knowledge. More specifically, its programs (OOGP, FGP, PGP) aim to successfully facilitate the creation, dissemination and use of health-related knowledge, as well as the development and maintenance of Canadian health research capacity by supporting original, high quality, and innovative projects proposed and conducted by individual researchers or groups of researchers in all areas of health research. The PGP also aims to promote collaboration across disciplines, professions and sectors.

Performance: Impact Assessment of the OOGP

Key Findings

- OOGP funding across pillars (the majority of OOGP grants are Pillar 1 Biomedical) has successfully facilitated the creation, dissemination and use of health-related knowledge (mainly within academia), as well as enhanced Canadian health research capacity.

- The OSP has attracted and funded research excellence. OOGP-funded researchers and FGP and PGP applicants were more productive and had greater impact than health researchers in Canada and other OECD countries.

- The production of OOGP research outputs per grant increased since the 2012 evaluation; over half of the grants resulted in outcomes related to advancing knowledge beyond productivity and involved other researchers in KT activities.

- Greater research productivity (publications and conference presentations) was observed for Pillar 1 grants, as well as grants with male NPIs.

- Grant duration and grant amount were both strong predictors of journal article productivity, although duration was a stronger predictor. Sex was also found to be a predictor of productivity (although not as strong).

- OOGP funding across pillars (the majority of OOGP grants are Biomedical) has contributed to building Canadian health research capacity through the involvement of research staff and trainees.

- The average number of research staff and trainees attracted to and trained by OOGP grants increased from 9 to 14 per grant.

- Pillar 1 grants and grants with male NPIs involved more research staff and trainees.

- OOGP funded research results demonstrated limited translation of knowledge beyond academia (e.g., to other researchers and study stakeholders), longer-term health impacts, and socio-economic impacts.

- Less than half of OOGP grants reported involving and impacting stakeholders beyond other researchers and study stakeholders. Pillar 2 through 4 grants were more likely to impact health practitioners.

OOGP funded research has resulted in advances in knowledge

The outcomes and impacts of investments in the OOGP in terms of advancing health-related knowledge through the production and use of research were examined through an analysis of end of grant reports, case studies, and bibliometric analyses. Recall that the CAHS Impact Framework was used to guide the analysis of outcomes and impacts from the OOGP. CAHS defines advancing knowledge as new discoveries and breakthroughs from health research, and contributions to the scientific literature. It includes measures of research quality, research activity (volume), outreach to other researchers, and structural measures (the research fields in which an organization is active and how it balances its portfolio of different research fields).

The best researchers were selected for OOGP funding

Bibliometric analysis is one frequently used approach to measuring knowledge creation, and is seen as an objective, reliable and cost-effective way to measure peer-reviewed research outputs (Campbell et al., 2010). Academic papers published in widely circulated journals facilitate access to the latest scientific discoveries and advances and are seen as some of the most tangible outcomes of academic research (Godin, 2012; Larivière et al., 2006; Moed, 2012; Thonon et al., 2015). More specifically, bibliometric analysis employs quantitative analysis to measure patterns of scientific publication and citation, typically focusing on journal papers, to assess the impact of research (Ismail, Farrands, & Wooding, 2009). In this evaluation, the Average of Relative Citations (ARC) and the Average Relative Impact Factor (ARIF) are used as measures of scientific impact (Footnote vii).
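As a rough illustration of how a relative citation indicator of this kind is commonly computed, the sketch below divides each paper's citation count by the average for papers in the same field and publication year, then averages those ratios, so that a score of 1.0 corresponds to the world average. The field names, baseline values, and citation counts are hypothetical, and the exact method used in this evaluation (described in the footnote) may differ.

```python
from statistics import mean

# Hypothetical world-average citations per paper, keyed by (field, year).
BASELINE = {
    ("cardiology", 2012): 10.0,
    ("public health", 2012): 5.0,
}

def relative_citations(citations, field, year, baseline=BASELINE):
    """Citations of one paper divided by the average for its field and year."""
    return citations / baseline[(field, year)]

def average_relative_citations(papers, baseline=BASELINE):
    """Mean of the per-paper relative citation scores (1.0 = world average)."""
    return mean(relative_citations(c, f, y, baseline) for c, f, y in papers)

# Hypothetical papers: (citations, field, year).
papers = [
    (20, "cardiology", 2012),     # 20 / 10 = 2.0
    (5, "cardiology", 2012),      # 5 / 10 = 0.5
    (10, "public health", 2012),  # 10 / 5 = 2.0
]
arc = average_relative_citations(papers)
print(round(arc, 2))  # 1.5
```

Normalizing by field and year is what allows scores to be compared across disciplines with different citation practices, which is one of the limitations of raw citation counts.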

The limitations of bibliometric analysis include difficulties estimating publication quality based on citations, differences in citation practices across disciplines and sometimes between sub-fields in the same discipline, as well as the difficulty moving beyond contribution to attribution (Ismail, Nason, Marjanovic, & Grant, 2012). CIHR has also recently signed the San Francisco Declaration on Research Assessment (DORA), which recognizes the need to improve the ways in which the outputs of scholarly research are assessed. Consistent with best practice, bibliometric analysis is triangulated with other lines of evidence in this evaluation to assess knowledge creation as a result of the program. Analysis of end of grant report information as well as case studies were used to assess highly impactful research resulting from OOGP funding. It should also be noted that the bibliometric analyses in this report are based on data for publications produced by OOGP researchers during the time they are supported by these grants. While this method is commonly accepted based on an assumption that these grants are a significant contribution to research output (e.g., Campbell et al., 2010; Ebadi & Schiffauerova, 2015), a direct attribution between grant and publication bibliometric data cannot be made.

An analysis of the journal publications and associated citations (up to and including 2016) of a sample of funded (n = 2,500) and unfunded (n = 500) applicants from OOGP competitions from 2000 to 2014 was undertaken. Overall, results demonstrated that the selection process used in OOGP competitions allows for the selection of the best applicants. Funded applicants produced, on average, 0.6 more papers with greater impact. Specifically, both the ARC and ARIF scores for funded applicants were higher than for unfunded applicants during the two years prior to the competitions (1.60 vs. 1.34 and 1.39 vs. 1.21, respectively). However, it should be noted that these differences, while statistically significant, are small.

Researchers were more productive when supported by OOGP funding

For the studied period (2001-2015), funded applicants published, on average, slightly more than four papers annually compared to 2.6 when they were not funded. The ARIF of supported papers (Footnote viii) improved with time and the ARIF value of supported papers for the 2000-2016 period (1.39) is above that of unsupported papers (1.30). However, despite a statistically significant difference (p < 0.001), supported publications do not have a practically greater ARC score (1.57) than the unsupported ones (1.55). The ARC in the current evaluation is slightly higher than the ARC observed in the previous evaluation, which increased significantly between 2001-2005 and 2006-2009 (1.44 vs. 1.54, p < 0.001).

In addition, post OOGP funding, funded applicants were also more productive (with an average of 0.6 more papers than unfunded applicants) and had greater impact – publishing papers that are getting more citations and publishing in journals that are cited more often than unfunded applicants. Funded applicants had greater ARC and ARIF scores than those who were unfunded (1.61 vs. 1.35 and 1.40 vs. 1.21, respectively); although statistically significant, this difference is relatively small. There is a global improvement in the scientific performance of funded researchers over time. However, the gap between funded and unfunded applicants widens over the period since papers stemming from funded applicants increase their scientific impact (ARIF and ARC) more markedly than the papers stemming from unfunded applicants.

Overall, OOGP funding is positively correlated with scientific productivity and impact. Recall that the data show that supported researchers were more productive and had better impact scores than applicants who were unfunded in OOGP competitions. In addition, this study demonstrated that the duration of OOGP funding is also positively correlated with productivity. As one might expect, on average, senior researchers (more than 10 years of experience) produce more papers than early (5 years or less of experience) and mid-career researchers (between 6 and 10 years of experience). However, in terms of impact, there is no difference in ARIF scores across career stages while early career researchers have higher ARC scores compared to mid and senior-career researchers. This finding is likely due in part to the fact that the ARC in the current study includes self-citations and it supports the assertion that early career researchers tend to cite newer and younger literature (Gingras, Larivière, Macaluso, & Robitaille, 2008).

Subgroup analysis by sex shows that male researchers were slightly more productive than female researchers (average of 0.7 more papers annually) and had greater impact scores (ARC = 1.62 vs. 1.48; ARIF = 1.41 vs. 1.34, respectively). This finding is consistent with the literature showing that men tend to have greater bibliometric research productivity and impact outcomes compared to women (Larivière, Ni, Gingras, Cronin, & Sugimoto, 2013).

Subgroup analysis by preferred language shows that among researchers whose preferred language is French, only 2.16% of their publications are in French. It should be noted that almost all papers indexed in the Clarivate Analytics Web of Science database used for the bibliometric analyses are in English and therefore language results should be interpreted with caution.

OOGP-funded researchers had greater scientific impact than other health researchers

OOGP-funded researchers not only show better impact in the health sciences than the Canadian average (ARC = 1.61 vs. 1.34; ARIF = 1.39 vs. 1.18, respectively), but also than the best performing OECD countries in this domain (ARC ranges from 1.20 to 1.57; ARIF ranges from 1.07 to 1.28). This finding is consistent with that found in the previous evaluation of the OOGP (CIHR, 2012).

OOGP research outputs increased since the previous evaluation

In addition to the results of the bibliometric analyses relating to research quality, end of grant reports include measures related to research activity such as the number of knowledge products produced as a result of an OOGP grant (i.e., journal publications, conference presentations, books/book chapters, and technical reports). End of grant data, provided via self-report from grant NPIs, is currently collected by CIHR for all operating grants, typically within 18 months of grant completion (see Appendix B - Methodology for additional details on end of grant reports and methods of analysis). These data should be interpreted with some caution given that they are self-reported, represent 29% of grants awarded at that time, and give no indication of the quality, use, or translation of the knowledge products produced. However, when considered alongside bibliometric analyses, this measure provides useful data on the outputs that result from this investigator-initiated program, as well as some insight into the publishing behaviours of the different parts of CIHR's health research community funded through the OOGP.

The most reported knowledge products produced from grants are published journal articles and conference presentations. In general, and as expected, the production of other types of knowledge products was quite low (e.g., 40% of grants produced books/book chapters, 10% produced technical reports). The range in number of each type of scientific output varied considerably across all types (Table 2: Knowledge Products, Grant Duration and Amount by Pillar).

Almost all (95%) OOGP-funded researchers publish journal articles, with an average number of 10.62 per grant. This is an increase from the average of 7.6 papers per grant reported in the previous evaluation (CIHR, 2012). It is not entirely clear why the number of articles has increased; however, it may be attributable to an overall increase in journal productivity observed globally (Bornmann & Mutz, 2015; Monroy & Diaz, 2018). Additionally, the majority of OOGP grants resulted in invited conference presentations (88%, average of 13 per grant), and over half resulted in other presentations (59%).

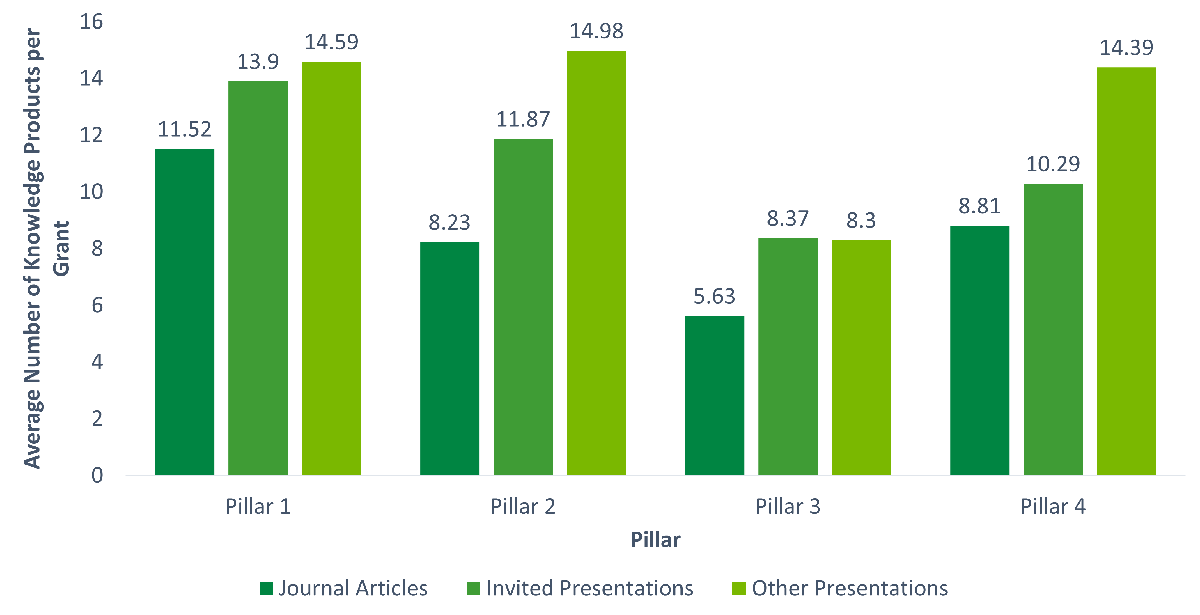

Pillar 1 grants and grants with male NPIs produced more journal articles and conference presentations

Additional analyses show that there are significant differences in journal article production and conference presentations (invited and all other) across pillars (p < .001). Pillar 1 grants produced more journal articles (M = 11.52) compared to all other pillars (Pillar 2 - M = 8.23, Pillar 3 - M = 5.63, Pillar 4 - M = 8.81, with no significant differences among Pillars 2-4; see Figure C: Average Number of Publications and Presentations by Pillar). It should be noted that the average number of publications increased for all pillars from the previous evaluation, by approximately 2-3 additional papers on average. Pillar 1 grants were also associated with a higher number of invited presentations than Pillars 3 and 4 (with no difference from Pillar 2). In terms of all other conference presentations, Pillar 3 was significantly lower than all other pillars.

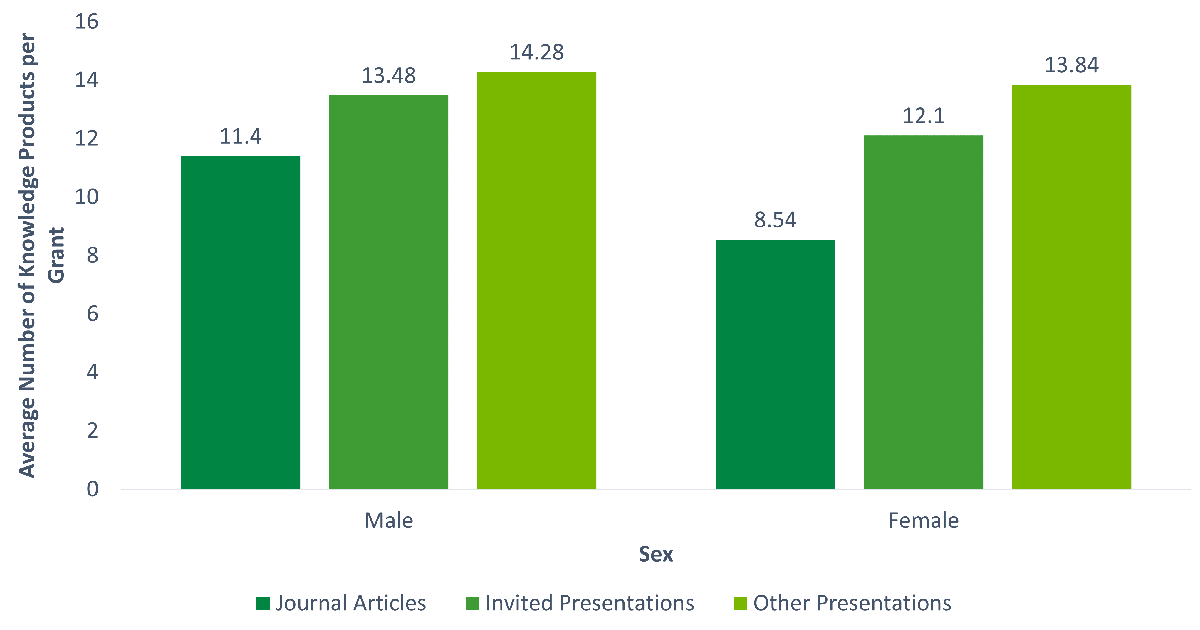

Additional analyses show that there are significant differences in journal article production and the number of invited conference presentations across sex as well (p < 0.001), while there was no difference for all other conference presentations (p < 0.04). Grants with male NPIs produced a higher number of published journal articles and conference presentations than grants with female NPIs (see Figure D: Average Number of Publications and Presentations by Sex).

Unsurprisingly, there is an observable increase in the average number of published journal articles and invited presentations as the career stage of NPIs on grants increases from early (M = 9, SD = 9), to mid (M = 10, SD = 11), to senior (M = 12, SD = 13). This finding, for publications, is consistent with the bibliometric results above. Overall, there were no observable differences in knowledge products based on the preferred language of the NPI.

Grant duration and amount were significant predictors of journal article productivity, followed by sex

Consistent with the previous evaluation, journal article production is moderately correlated with the amount (value) and duration of the grants awarded (r = 0.36, n = 3,134, p = 0.01 for both independent variables; see Table 2: Knowledge Products, Grant Duration and Amount by Pillar). Additionally, the amount and duration of grants are strongly correlated with each other, in that longer grants tend to have more money (r = 0.67, n = 3,304, p < 0.01). Therefore, both the duration and the amount of a grant appear to have an important relationship with the number of publications produced.
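For readers less familiar with the statistic, the sketch below shows how a correlation coefficient of this kind can be computed, assuming the reported r values are Pearson correlations (the report does not state this explicitly). The grant durations and article counts are hypothetical and are not drawn from the end of grant data.

```python
from math import sqrt

def pearson_r(xs, ys):
    """Pearson correlation: covariance scaled by the two standard deviations."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)

# Hypothetical grants: duration in years and number of journal articles.
durations = [2, 3, 3, 4, 5, 5]
articles = [4, 9, 5, 12, 10, 16]

r = pearson_r(durations, articles)
print(f"r = {r:.2f}")
```

A value near 0 indicates little linear relationship and a value near 1 a strong positive one, which is why r = 0.36 is described as moderate and r = 0.67 as strong.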

Grant duration and amount differ across the four pillars: biomedical (Pillar 1) researchers have the longest grant durations on average (4.3 years) compared with the other three pillars (3.4, 2.7, and 3.1 years for Pillars 2 through 4, respectively; see Table 2: Knowledge Products, Grant Duration and Amount by Pillar for additional data)Footnote ix. These differences are statistically significant (one-way ANOVA, p < 0.001), similar to the previous evaluation. Grant duration and amount also differ by sex: on average, male NPIs hold significantly longer grants (M = 4.1 years, SD = 13.22, n = 2,375, p < 0.001) compared to female NPIs (M = 3.7 years, SD = 13.42, n = 925). Similarly, male NPIs receive significantly larger amounts of funding than female NPIs (M = $527,436, SD = 268,967, n = 2,375; M = $452,985, SD = 291,672, n = 925, p < 0.001), representing an average gap of $74,451 (see Table 3: Knowledge Products, Grant Duration and Amount by Sex). Given these differences, further analyses were conducted to investigate whether the difference in published journal articles by pillar or sex could be attributable to an overlap with duration and amount, as opposed to other distinct differences among pillars or between sexes.

A moderated regression model was used to determine whether the predictive relationship of grant duration and amount on the number of published journal articles was influenced by pillar. The model confirmed that grant duration was a strong predictor of the production of journal articles (p < 0.001), as was amount (p < 0.001). Beyond this, however, pillar was neither a significant predictor nor a significant moderator of articles published. Interestingly, although duration and amount are related (r = 0.66; i.e., longer grants tend to have more money), differences in productivity are largely due to the duration of the grant, followed by amount (which accounts for some variance independent of duration), while pillar has a negligible effect. This suggests that the differences in productivity observed among pillars are largely a function of duration and/or amount rather than pillar itself.
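To illustrate the logic of a moderated regression, the following is a minimal sketch in Python. It is not the evaluation's actual model or data: the data are synthetic, and the function name, variable names, effect sizes and the use of a binary grouping variable (a stand-in for pillar or sex) are all assumptions made for illustration. The synthetic outcome depends only on duration and amount, while the two groups differ in average duration, so a raw gap in article counts appears even though the group term and its interactions are close to zero once duration and amount are in the model.

```python
import numpy as np

def fit_moderated_regression(duration, amount, group, articles):
    """Ordinary least squares fit of articles on duration, amount, a binary
    group indicator, and the group-by-predictor interaction (moderation) terms.
    Hypothetical helper for illustration only."""
    # Centre the continuous predictors so the group coefficient is the
    # between-group difference at average duration and amount.
    d = duration - duration.mean()
    a = amount - amount.mean()
    X = np.column_stack([
        np.ones_like(d),  # intercept
        d,                # main effect of duration
        a,                # main effect of amount
        group,            # main effect of group
        d * group,        # does group moderate the duration effect?
        a * group,        # does group moderate the amount effect?
    ])
    coef, *_ = np.linalg.lstsq(X, articles, rcond=None)
    names = ["intercept", "duration", "amount", "group",
             "duration_x_group", "amount_x_group"]
    return dict(zip(names, coef))

# Synthetic data (assumed values, not figures from the evaluation).
rng = np.random.default_rng(0)
n = 2000
group = rng.integers(0, 2, size=n).astype(float)
duration = rng.normal(4.0, 1.0, size=n) - 0.5 * group        # group 1 holds shorter grants
amount = 100.0 * duration + rng.normal(0.0, 100.0, size=n)   # thousands of dollars
articles = 2.0 * duration + 0.01 * amount + rng.normal(0.0, 1.0, size=n)

coefs = fit_moderated_regression(duration, amount, group, articles)
raw_gap = articles[group == 0].mean() - articles[group == 1].mean()

for name, value in coefs.items():
    print(f"{name:>18}: {value: .3f}")
print(f"{'raw group gap':>18}: {raw_gap: .3f}")
```

In this sketch the raw difference in mean article counts between the two groups is clearly positive, while the fitted `group`, `duration_x_group` and `amount_x_group` coefficients are near zero, which mirrors the kind of pattern reported for pillar above.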

Similarly, a moderated regression model showed that in addition to grant duration and amount, sex was also a significant predictor of the number of published journal articles (p = 0.04 for sex). In other words, male NPIs who have longer grants with more money are likely to produce a greater number of journal articles. As with pillar in the model above, however, sex was not as strong a predictor of productivity as grant duration or amount.

The significance of this analysis from an evaluation perspective is that it shows how assessing productivity by simply counting publications can be misleading. Given these findings, as well as requirements in the current Policy on Results, future evaluations and performance measurement of the OSP should take into account variables that may have an influence on productivity. In addition, these findings should be considered in the future design and implementation of investigator-initiated programs. Areas for future research include understanding optimal grant durations and amounts (i.e., identifying when there are diminishing returns in productivity based on length of grant) with investigator-initiated funding where duration and amount are largely proposed by the researchers themselves.

Almost half of grants resulted in outcomes related to advancing knowledge beyond productivity

NPIs were asked about the production of a variety of other outcomes on their end of grant reports, some of which correspond with the CAHS category of advancing knowledge, such as: research method, theory, replication of research findings, and/or tool, technique, instrument or procedure. Specifically, they were asked whether the grant had resulted in an advanced or newly developed outcome, or whether it will result in a future outcome. Just under 50% of the grants (n = 3,304) resulted in these additional outcomes related to advancing knowledge. Almost half of the grants resulted in an advanced research method (44%), theory (49%) and/or replication of research findings (41%). Almost one third (30%) resulted in an advanced tool, technique, instrument or procedure.

NPIs indicated that advanced outcomes related to advancing knowledge were more likely to result from the grants right away (or within 18 months of grant completion, at the time they completed end of grant reports) rather than newly developed outcomes or outcomes that may occur in the future. As such, fewer newly developed outcomes had resulted from OOGP grants (9-30%, depending on specific outcome type) and an even smaller portion of NPIs indicated these potential outcomes may occur in the future as a result of the grant (7-12%). It is not entirely clear why there is such a wide range in responses; however, there are several outcomes related to advancing knowledge measured in the end of grant report, some of which just simply may not be as applicable for all (e.g., theory). Additionally, the end of grant reports include multiple measures of the same constructs (i.e., advanced, newly developed, may in the future) which may also contribute to increased variation in responses.

Biomedical grants accounted for more of the advancing knowledge outcomes than grants from the three other pillars, as did grants with male NPIs compared to those with female NPIs. Given the low proportions here, further analyses by pillar and sex are not reported.

Over half of grants involved other researchers in end of grant knowledge translation activities

Another area identified in the CAHS framework relating to advancing knowledge is outreach to other researchers. End of grant reports asked NPIs to report on their engagement with, impact on, and involvement of a variety of stakeholders including other researchers and academics (i.e., those not formally listed on their grant applications). In the context of this evaluation, this is considered to be an indicator of outreach to other researchers.

The majority of NPIs (87%) reported that other researchers and academics were aware of the findings resulting from their grants. There were observable differences by pillar, with considerably fewer Pillar 3 and 4 grants indicating other researchers and academics were aware of their results (62% and 37%, respectively). This would suggest that NPIs on these grants tend to reach out to other researchers less frequently than NPIs on Pillar 1 and 2 grants.

NPIs were also asked the extent to which their grant had an impact on various stakeholder groups including other researchers/academics (those not included in the formal grant application). On average, NPIs felt their grant influenced other researchers to some extent (M = 3.37 out of 4, SD = 0.75, n = 3,303). There were no observable differences by pillar or sex.

Lastly, NPIs were asked to indicate the phases of the grant-related research process in which other researchers/academics were involved. This included overall involvement and involvement at every stage of the research process. Over half (57%, n = 1,891) of the total 3,304 grants were identified as having other researchers/academics involved in the research process overall. With respect to individual stages of the grant-related research process, the stage identified as having the highest involvement of other researchers/academics (of those grants identified as having involvement at some level) was End of Grant Knowledge Translation Activities (57%, n = 1,073), followed by Development of the Protocol (48%, n = 604). Additionally, of those who responded that other researchers/academics were involved (to some extent), 42% (n = 793) indicated that these stakeholders were involved in Data Collection/Project Implementation. Interpretation of Results and Development of the Research Question were cited as the stages with the lowest involvement from other researchers/academics, at 36% (n = 671) and 35% (n = 669), respectively.

In addition to analyzing the end of grant report data, eight case studies of high impact grants were conducted (see Appendix B - Methodology for additional information on case selection and methods) providing additional insights into the outcomes and impacts of OOGP grants. Each case greatly advanced knowledge in its respective research area(s), which is to be expected given that high impact cases were selected. The researchers had successfully carried out their planned research programs, although with some adjustments that emerged from results along the way or from changes in collaborations. In general, the advances had proceeded in incremental steps through the execution of long-term research programs involving clusters of interrelated studies and analyses.

Case study researchers and trainees were highly productive and shared knowledge advances extensively. Researchers chose an important health research problem with great potential for health impact, which could therefore advance knowledge in a highly competitive domain. The research programs started from a long-term, high-level vision for the direction the research could take over a period of decades, and the NPI and team persisted in executing that vision. The research programs used longitudinal research designs and/or developed large, high-quality databases. Knowledge advances were shared via national and international collaborations, presentations, and publications, among other media.

Collaborations among investigators contributed to the intellectual development of the main research ideas. In the Pillar 1 (Biomedical) and 2 (Clinical) cases, collaborations between basic and clinical scientists were particularly impactful in advancing the research. The NPIs adopted and maintained high standards of methodological excellence in research, training, and choice of collaborators. Researchers stayed on top of developments at the forefront of the field, showing flexibility and adaptability to integrate emerging advances from elsewhere. Long term, stable funding from CIHR and many other sources aligned with the long-term vision and enabled its systematic execution.

OOGP Research Contributed to Building Health Research Capacity

Capacity development is a key objective in the OOGP as well as in the new suite of programs. It is also a key priority for CIHR as evidenced in its Act, strategic plan (Roadmap II, Strategic Direction I) and training strategy. CIHR supports capacity development directly through grants and awards for individual researchers and trainees, and indirectly, through providing funding for research projects that develop capacity through the involvement of students, trainees and other researchers/stakeholders.

The definition of capacity development used in the previous evaluation included the direct involvement in the research process of any paid or unpaid staff or trainee, including: researchers; research assistants; research technicians; postdoctoral fellows; post-health professional degree students (e.g., MD, BScN, DDS); fellows (not pursuing a Master's or PhD); and doctoral, Master's, and undergraduate student trainees. The same approach is adopted in the current evaluation along with the application of the CAHS Impact Framework. CAHS defines capacity building as the development and enhancement of research skills in individuals and teams. It is measured across three subcategories: personnel, additional activity funding, and infrastructure required for research.

Pillar 1 researchers received the majority of OOGP grants

Pillar 1 researchers received the majority of OOGP grants (around 72% of 13,331 grants from 2000-01 to 2015-16), a proportion that has been consistent since 2002-03. The percentage dropped slightly from 80% in the previous evaluation (CIHR, 2012). Pillar 2 researchers received 12% of the OOGP grants, Pillar 4 received 8.5%, and Pillar 3 received 6%. While grants were funded across all four pillars, some barriers may still be limiting greater representation from Pillar 3 and 4 researchers. The previous evaluation identified a range of barriers and challenges for researchers from these pillars applying to the OOGP, relating to peer review, renewal applications and success, and cross-disciplinary projects (Thorngate, 2002; Tamblyn, 2011; Tamblyn et al., 2016).

OOGP grants are contributing to capacity building

Almost all OOGP grants involved research staff (95%; total of 16,347 research staff) and trainees (97%; total of 28,237 trainees), thereby contributing to capacity building in health research (Table 4: Research Staff and Trainees Involved in OOGP Grants). The average number of staff members and traineesFootnote x contributing to the research conducted per grant overall was 13.5, up from 8.61 in the previous evaluation (2012). This is expected given that student enrolment in Canadian universities has been over 2 million since 2011/12 and has been steadily increasing at rates of 1-3% per year (StatsCan, 2017). The increase in the average number of researchers and trainees involved in OOGP grants is therefore likely due in part to this growth in enrolment.
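The per-grant average reported above can be checked with a short calculation. The sketch below assumes the average is simply the combined total of research staff and trainees divided by the 3,304 sampled grants; that reading is an assumption, although it is consistent with the reported figure. All three inputs are taken from this section, and the variable names are illustrative.

```python
# Totals reported in this section (Table 4 and the end of grant report sample).
research_staff = 16_347
trainees = 28_237
sampled_grants = 3_304

# Assumed definition: combined personnel divided by the number of sampled grants.
average_per_grant = (research_staff + trainees) / sampled_grants

print(round(average_per_grant, 1))  # 13.5
```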

It is important to note that the numbers of researchers and trainees provided are estimates based on end of grant reports from a sample of 29% of OOGP grants funded between 2000 and 2013. Therefore, the total number of research staff and trainees presented here is an underestimation. Not all NPIs who received an OOGP grant completed an end of grant report (it did not become mandatory until 2011, and there is still not 100% compliance). However, the current sample (n = 3,304 out of 13,331) is representative in terms of demographic characteristics of the population. The reports in our sample were submitted between 2011-12 and 2016-17. Therefore, although the total number of HQP trained or supported over this period provides an understanding of the number involved and trained through OOGP grants, averages may not be representative of the population of HQP, and total numbers are an underestimation of the population.

One-half to two-thirds of grants involved research staff

More than half of the sampled grants (out of 3,304) involved research staff (researchers: 56%; research assistants: 62%; research technicians: 63%), for a total of 16,347 research staff (Table 4: Research Staff and Trainees Involved in OOGP Grants). On average, each grant involved more researchers (M = 4, both paid and unpaid) than research assistants (M = 3) or technicians (M = 2).